In 1984, a Lisp machine, a specialized computer hailed as the future of AI, was selling for $100,000. By 1987 the market for these machines had collapsed, and their best-known manufacturer, Symbolics, filed for bankruptcy a few years later. This wasn’t an anomaly. Twice in AI’s history, unbridled optimism collided with brutal reality, triggering eras known as AI winters: periods when funding vanished, careers stalled, and the field nearly died. Yet these winters forged today’s AI revolution. This post explores how overpromising, policy failures, and quiet persistence reshaped AI into the resilient force powering ChatGPT and self-driving cars.

The Hype Cycle Trap

Artificial intelligence (AI) research has always moved in cycles: extreme optimism, then disillusionment, then resurgence. The troughs are the “AI winters,” and, painful as they were, they shaped the field into what it is today. The sections below cover why each winter happened, how AI research survived it, and what lessons apply to today’s AI boom.

The Gartner Hype Cycle is a useful framework for understanding these shifts. It describes the typical progression of emerging technologies, which includes:

- Innovation Trigger: A new breakthrough sparks excitement and investment.

- Peak of Inflated Expectations: Exaggerated claims lead to excessive optimism.

- Trough of Disillusionment: When the technology fails to deliver, funding dries up, and interest wanes.

- Slope of Enlightenment: Real, practical applications emerge, proving the technology’s value.

- Plateau of Productivity: The technology becomes mainstream and widely adopted.

AI has gone through this cycle multiple times, and understanding its history can help predict its future. Let’s explore the AI winters and how each setback led to eventual breakthroughs.

The First AI Winter (1974–1980): Lighthill’s Brutal Takedown

The first major AI winter took place in the 1970s, primarily due to the Lighthill Report, a critical assessment of AI’s progress. Commissioned by the British government, the report, written by mathematician Sir James Lighthill in 1973, argued that AI research had failed to meet its ambitious goals. The report concluded that AI’s practical applications were limited, and further investment was unlikely to yield significant returns.

The Lighthill Report had devastating consequences:

- Funding cuts: The UK government drastically reduced financial support for AI research.

- Reduced academic interest: AI research programs were scaled back or abandoned.

- Shift in focus: Many researchers moved toward more feasible projects, such as expert systems.

The AI community was caught off guard. In the 1950s and 60s, there was widespread optimism that AI would soon match human intelligence. Early AI programs, such as those developed by Allen Newell and Herbert Simon, demonstrated problem-solving capabilities, but these programs worked only in highly controlled environments. The real world, with its complexity and unpredictability, proved to be far more challenging.

The Optimism Bubble

The 1956 Dartmouth Conference had set grand expectations, and early predictions put human-like AI only a decade or two away. By the early 1970s, progress had stalled. Early successes like the Logic Theorist (1956) and ELIZA (1966) were narrow and brittle. Governments and investors grew impatient.

The Lighthill Report (1973)

- Commissioned by: The UK Science Research Council.

- Authored by: Sir James Lighthill, a renowned fluid dynamicist with no AI background.

- Key Criticisms:

- AI had failed to deliver “general-purpose” intelligence.

- Early successes (e.g., chess programs) were limited to “toy problems.”

- Most damning: “In no part of the field have the discoveries made so far produced the major impact that was then promised.”

Impact

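- UK Funding Cuts: Government support for AI research was withdrawn from all but a handful of universities.

- Global Ripple Effect: In the U.S., the Defense Advanced Research Projects Agency (DARPA) sharply cut back its funding for open-ended academic AI projects around the same time.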

- Cultural Shift: AI became a dirty word in academia. Researchers rebranded as “machine learning” or “informatics.”

Survival Tactics

- Expert Systems: Programs like MYCIN (1976), whose treatment recommendations for blood infections were rated acceptable in about 65% of cases in a Stanford evaluation (on par with or better than human specialists), kept AI relevant in niche domains.

- Stealth Research: Universities embedded AI work into robotics and computer vision projects to avoid the “AI” label.

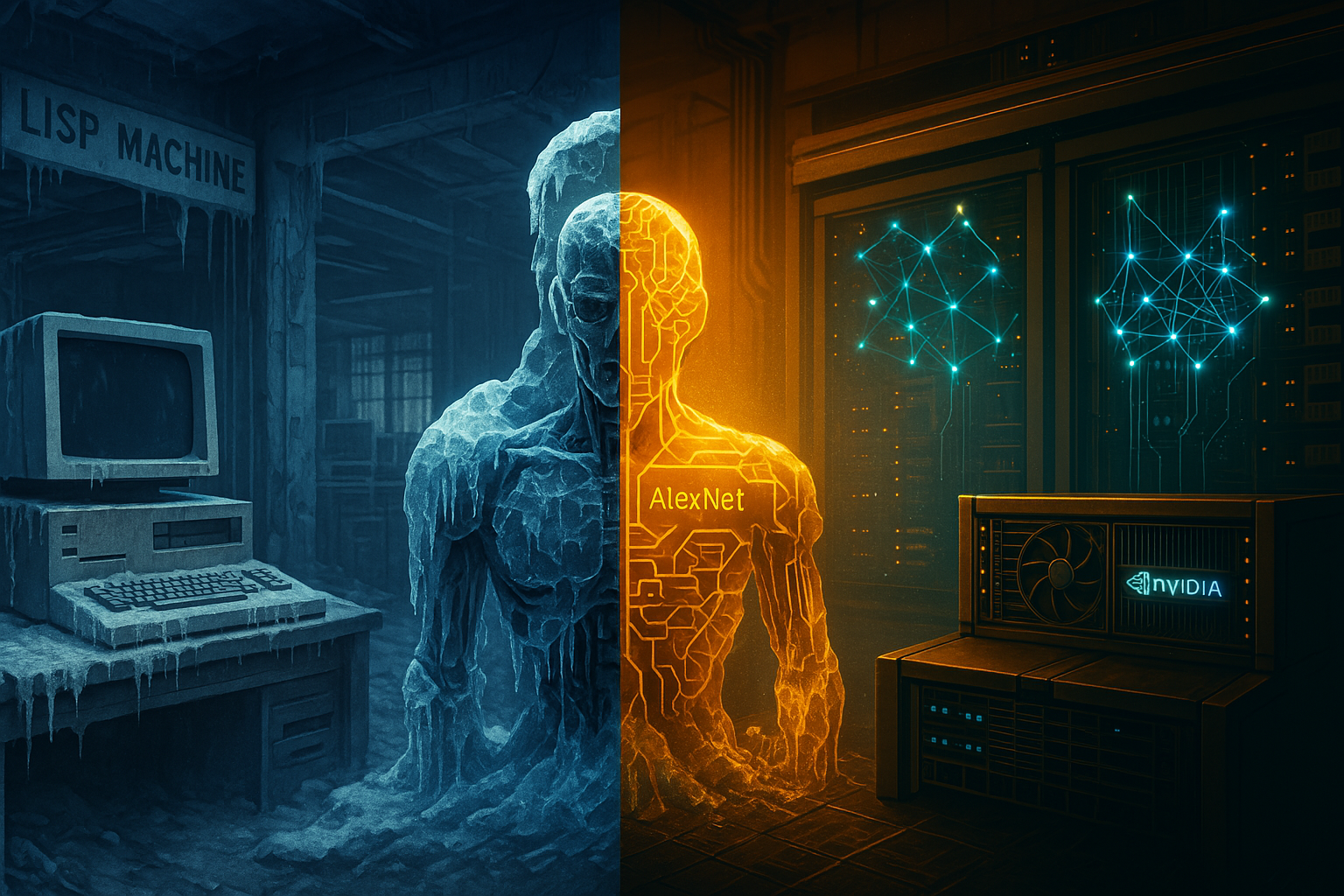

The Second AI Winter (1987–1993): The Collapse of the Lisp Machine and the Silent Revolution

The Rise and Fall of Symbolic AI

1. Lisp Machines: The Promise and the Pitfalls

In the early 1980s, Lisp machines were hailed as the future of artificial intelligence. These specialized computers, built by companies like Symbolics, Lisp Machines Inc., and Texas Instruments, were optimized to run Lisp (LISt Processing), the dominant programming language of AI research at the time.

- Why Lisp?

- Lisp’s flexibility made it ideal for AI experimentation, allowing researchers to manipulate symbolic logic efficiently.

- Early AI pioneers, including John McCarthy (who invented Lisp in 1958), believed that symbolic reasoning (rule-based AI) was the key to human-like intelligence.

- The Hype

- Companies marketed Lisp machines as “AI workstations” that would bring artificial intelligence into mainstream business.

- Vendor marketing leaned on the idea of machines that could reason like human experts, a dangerous overpromise.

- The Reality Check

- By 1987, general-purpose UNIX workstations (like those from Sun Microsystems) became cheaper and faster than Lisp machines.

- Businesses realized they didn’t need expensive specialized hardware—they could run AI experiments on standard computers.

- Demand collapsed, and by the early 1990s, companies like Symbolics went bankrupt.

2. The XCON Disaster: When Expert Systems Failed to Scale

What Was XCON?

XCON (eXpert CONfigurer) was one of the most famous expert systems of the 1980s. Developed at Carnegie Mellon University for Digital Equipment Corporation (DEC), it automated the configuration of computer orders, a complex task that required deep technical knowledge.

- Initial Success (1980–1986)

- XCON saved DEC an estimated $40 million over six years of operation by reducing configuration errors.

- It was so successful that DEC built additional expert systems for sales and customer support.

- The Downfall (1987–1993)

- Rule Explosion: XCON relied on over 10,000 hand-coded rules to function. Every new DEC product required manual updates to the system.

- Maintenance Nightmare: By the late 1980s, keeping XCON updated cost more than hiring human engineers.

- The Final Blow: In 1993, DEC scrapped XCON entirely, marking the end of the expert systems boom.

Why Did Expert Systems Fail?

- Brittleness: They couldn’t adapt to new problems without manual rule updates.

- Cost: Maintaining large rule-based systems was prohibitively expensive.

- Competition: Machine learning (neural networks) was quietly advancing in the background.
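The brittleness described above is easier to see in miniature. The following is a minimal sketch of a hand-coded rule base, not a reconstruction of XCON: the product names, rules, and the `configure` function are all hypothetical, invented only to show why every new product forces someone to write new rules by hand.

```python
# Hypothetical, much-simplified rule base in the spirit of a configuration
# expert system. Product names and rules are invented for illustration.
RULES = [
    # (condition on the order, component to add)
    (lambda order: order["cpu"] == "model-a", "power-supply-300w"),
    (lambda order: order["cpu"] == "model-b", "power-supply-500w"),
    (lambda order: order["disks"] > 2, "extra-disk-cabinet"),
]

KNOWN_CPUS = {"model-a", "model-b"}


def configure(order):
    """Apply every matching rule and return the components to add."""
    if order["cpu"] not in KNOWN_CPUS:
        # No rule covers this product: a human has to write new rules.
        raise LookupError(f"no rules for cpu {order['cpu']!r}")
    return [part for condition, part in RULES if condition(order)]


print(configure({"cpu": "model-a", "disks": 4}))
# ['power-supply-300w', 'extra-disk-cabinet']
```

An order for an unlisted `model-c` raises `LookupError` until an engineer extends `RULES` and `KNOWN_CPUS`. Multiply that by thousands of rules and a fast-moving product catalog, and the maintenance burden becomes clear.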

The Quiet Rise of Machine Learning

While symbolic AI was collapsing, a small group of researchers kept neural networks alive—despite minimal funding and skepticism.

1. The Backpropagation Breakthrough (1986)

- David Rumelhart, Geoffrey Hinton, and Ronald Williams published a landmark paper in Nature showing that backpropagation (a method for training multi-layer neural networks) could learn useful internal representations.

- Why It Mattered: Before this, neural networks were seen as impractical. Backpropagation made them trainable on real-world data.
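For readers who want to see what backpropagation actually computes, here is a minimal sketch in plain Python. It is not the 1986 paper’s code: the network size (two inputs, two sigmoid hidden units, one sigmoid output), the squared-error loss, the starting weights, and the learning rate are all assumptions chosen to keep the example small.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w_hidden, w_out, x):
    """Return hidden activations and the network output for input x."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output


def loss(w_hidden, w_out, x, target):
    """Squared error between the network output and the target."""
    _, output = forward(w_hidden, w_out, x)
    return 0.5 * (output - target) ** 2


def backprop(w_hidden, w_out, x, target):
    """Gradients of the loss w.r.t. every weight, via the chain rule."""
    hidden, output = forward(w_hidden, w_out, x)
    # Error signal at the output unit (derivative of loss w.r.t. its input).
    delta_out = (output - target) * output * (1.0 - output)
    grad_out = [delta_out * h for h in hidden]
    # Propagate the error signal backwards to each hidden unit.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    grad_hidden = [[d * xi for xi in x] for d in delta_hidden]
    return grad_hidden, grad_out


# Illustrative starting weights and a single training example.
w_hidden = [[0.5, -0.3], [0.8, 0.2]]
w_out = [0.4, -0.6]
x, target = [1.0, 0.0], 1.0

before = loss(w_hidden, w_out, x, target)
grad_hidden, grad_out = backprop(w_hidden, w_out, x, target)

# One gradient-descent step with an assumed learning rate.
lr = 0.5
w_hidden = [[w - lr * g for w, g in zip(wr, gr)]
            for wr, gr in zip(w_hidden, grad_hidden)]
w_out = [w - lr * g for w, g in zip(w_out, grad_out)]
after = loss(w_hidden, w_out, x, target)

print(before, after)  # the loss is lower after the step
```

The key idea is in `backprop`: the output error is computed once and then reused to work out how much each hidden unit contributed, so the cost of computing all gradients stays close to the cost of one forward pass.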

2. Yann LeCun and the Birth of Modern Computer Vision (1989)

- LeNet-1: Working at Bell Labs, LeCun trained one of the first convolutional neural networks (CNNs) with backpropagation to recognize handwritten digits.

- Impact:

- This was the precursor to today’s AI vision systems (like facial recognition and self-driving cars).

- Yet, in the 1990s, few investors cared—AI was still in its winter.

3. Why Did Neural Networks Survive the Winter?

- Cheaper Hardware: Unlike Lisp machines, neural networks could run on standard computers.

- Scalability: Unlike expert systems, they learned from data instead of relying on hand-coded rules.

- Underground Research: A handful of groups, including those at the University of Toronto, Bell Labs, and Carnegie Mellon, kept neural network research alive with minimal funding.

Key Takeaways from the Second AI Winter

- Overhyped Hardware Fails: Lisp machines promised too much and delivered too little.

- Rule-Based AI Doesn’t Scale: Expert systems like XCON collapsed under their own complexity.

- The Future Was Machine Learning: Neural networks, though ignored in the 1990s, laid the foundation for today’s AI revolution.

The Thaw: GPUs, Big Data, and the 2012 AlexNet Breakthrough – How AI Finally Defrosted

Moore’s Law Meets AI: The Hardware Revolution

1. The GPU Gambit: From Gaming to AI Supercomputing

By the early 2000s, AI researchers faced a critical bottleneck: computing power. Traditional CPUs (central processing units) were too slow to train large neural networks efficiently. But an unlikely hero emerged: graphics processing units (GPUs).

- Why GPUs?

- Originally designed for rendering video game graphics, GPUs packed hundreds (and later thousands) of small cores optimized for parallel processing, a good fit for the matrix calculations neural networks rely on.

- In 2006, NVIDIA released CUDA, a programming framework that let researchers repurpose GPUs for scientific computing.

- The Impact

- Training times for neural networks plummeted from weeks to days.

- Suddenly, experiments that were previously impractical (like deep convolutional networks) became feasible.
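To see why matrix calculations suit parallel hardware, consider this small sketch in plain Python. It is CPU code, not CUDA, and the function name `matmul` and the tiny matrices are assumptions for illustration. The point is structural: every cell of the result is its own dot product and depends on no other cell, so in principle all of them can be computed at the same time.

```python
def matmul(a, b):
    """Multiply matrices a and b (given as lists of rows).

    Each output cell is an independent dot product of one row of `a`
    and one column of `b`; no cell needs the result of any other.
    """
    columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns]
            for row in a]


# A neural network layer is essentially inputs multiplied by weights.
inputs = [[1, 2], [3, 4]]
weights = [[5, 6], [7, 8]]
print(matmul(inputs, weights))  # [[19, 22], [43, 50]]
```

A CPU works through these cells a few at a time, while a GPU can assign them to many cores at once, which is where the drop in training time comes from.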

2. Big Data: The Fuel for the AI Fire

Hardware alone wasn’t enough—AI needed vast amounts of labeled data to learn from. The rise of the internet provided it.

- ImageNet: The Dataset That Changed Everything

- Introduced in 2009 by a team led by Fei-Fei Li, ImageNet grew to more than 14 million hand-labeled images across roughly 20,000 categories.

- For the first time, AI systems had enough data to generalize rather than just memorize.

- The Data Explosion

- Social media (Facebook, Flickr), e-commerce (Amazon product images), and digitized books (Google Books) provided petabytes of training material.

- This marked a shift from rule-based programming to data-driven learning.

The AlexNet Revolution (2012): The Shot Heard Round the AI World

What Actually Happened?

In the 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC), a University of Toronto team of Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton submitted a neural network that became known as AlexNet, after its lead author. The results stunned the computer vision community:

- Performance Leap

- AlexNet cut the top-5 classification error by roughly 41% relative to the next-best entry.

- Where the best traditional computer vision entry reached 26.2% top-5 error, AlexNet achieved 15.3%.

- Technical Breakthroughs

- Used ReLU activation functions (faster training than sigmoid).

- Implemented dropout regularization to prevent overfitting.

- Ran across two NVIDIA GTX 580 GPUs (3GB VRAM each) to handle the workload.
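Two of the techniques listed above, ReLU and dropout, are simple enough to sketch in a few lines of plain Python. This is an illustration, not AlexNet’s code: the function names are hypothetical, and the dropout shown is the “inverted” variant common in current frameworks (survivors are rescaled during training), which differs in bookkeeping from the original formulation that rescaled outputs at test time.

```python
import random


def relu(values):
    """ReLU: pass positive values through, clamp negatives to zero."""
    return [max(0.0, v) for v in values]


def dropout(values, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` while
    training, and rescale the survivors so the expected sum is unchanged."""
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
activations = relu([-1.5, 0.0, 2.0, 3.5])
print(activations)  # [0.0, 0.0, 2.0, 3.5]
print(dropout(activations, 0.5, rng))  # each unit is either zeroed or doubled
print(dropout(activations, 0.5, rng, training=False))  # unchanged at test time
```

ReLU’s gradient is 1 for positive inputs, so it does not shrink the way a saturating sigmoid’s gradient does. Dropout forces the network not to lean on any single unit, which is why it helps against overfitting.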

Why This Was the Tipping Point

- Proved Deep Learning’s Superiority

- Showed neural networks could outperform human-engineered features for image recognition.

- Ignited the AI Gold Rush

- In early 2013, Google acquired DNNresearch, the startup formed by Hinton, Krizhevsky, and Sutskever, for a reported $44 million.

- Tech giants began aggressively hiring deep learning researchers.

- Changed Research Priorities

- Universities worldwide shifted focus from symbolic AI to deep learning.

Case Study: Deep Blue vs. Kasparov (1997) – The First Hint of Thaw

The Historic Match

In May 1997, IBM’s Deep Blue supercomputer defeated reigning world chess champion Garry Kasparov in a six-game match (3.5–2.5).

- Technical Specs

- 30-node IBM RS/6000 SP supercomputer with 480 special-purpose chess chips.

- 11.38 GFLOPS on the LINPACK benchmark.

- Evaluated about 200 million positions per second.

Lasting Impact

- Restored Faith in AI

- After the AI winters, this very public victory proved machines could outperform humans in complex reasoning tasks.

- Brute Force Works

- Deep Blue had no intuition—it won through raw calculation, foreshadowing today’s data-centric AI.

- Corporate Investment

- IBM’s success paved the way for Google, Facebook, and others to invest heavily in AI.

The Lesson for Modern AI

Deep Blue showed that narrow, specialized systems could achieve superhuman performance—a philosophy that later birthed:

- AlphaGo (2016) for Go

- GPT-3 (2020) for language

The Post-2012 Landscape: Winter to Spring

The combination of GPUs + big data + AlexNet created a perfect storm:

| Year | Milestone | Significance |
|---|---|---|
| 2014 | Google acquires DeepMind | Corporate arms race begins. |
| 2015 | TensorFlow released | Democratized deep learning tools. |
| 2016 | AlphaGo defeats Lee Sedol | AI masters intuition-based games. |
| 2020 | GPT-3 | Proves scaling = capability. |

Lessons from the AI Winters: How History Guides Tomorrow’s AI

The AI winters weren’t just failures—they were stress tests that revealed how fragile technological progress can be. Today, as we ride the generative AI wave, these lessons are more relevant than ever.

Lesson 1: Avoid Overpromising – The Hype Trap

Then: The Dartmouth Delusion (1956)

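In their proposal for the legendary Dartmouth workshop, McCarthy, Minsky, and their co-organizers wrote:

“We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.”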

What Went Wrong?

- They underestimated the complexity of general intelligence.

- Rule-based systems (e.g., SHRDLU) worked in toy environments but failed in the real world.

- By the 1970s, unmet expectations led to the first AI winter.

Now: The Generative AI Bubble (2023–?)

- Companies promise AGI-like capabilities from LLMs (e.g., “ChatGPT can reason”).

- Reality: Even GPT-4 hallucinates facts and lacks true understanding.

- Risk: If generative AI fails to deliver real business value, another funding collapse could follow.

Key Takeaway:

“Underpromise, overdeliver.” Focus on measurable outcomes (e.g., “Our AI reduces customer support time by 30%”) rather than sci-fi claims.

Lesson 2: Embrace Incrementalism – Small Wins Matter

Then: MYCIN’s Life-Saving Narrow Focus (1976)

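- What It Did: Recommended treatments for bacterial blood infections; about 65% of its recommendations were rated acceptable in a Stanford evaluation, on par with or better than human specialists.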

- Why It Survived:

- Solved one critical problem (blood infections).

- Didn’t pretend to be “general AI.”

Now: Midjourney’s Art Revolution (2022–Present)

- Rather than chasing every possible use of image generation, Midjourney focused on creatives:

- Hyper-optimized for concept artists.

- Ignored unrelated use cases (e.g., medical imaging).

- Result: Reportedly profitable within a year as a small, self-funded team.

Key Takeaway:

“Niche beats generic.” Startups like Anthropic (focused on AI safety) and Stability AI (open-source models) built their identities by doing one thing well.

Lesson 3: Follow the Hardware – Compute is Destiny

Then: GPUs Saved AI (2006–2012)

- Problem: Neural networks were theoretically sound but too slow to train.

- Breakthrough: NVIDIA’s CUDA made GPUs accessible for AI.

- Impact: AlexNet’s 2012 win would’ve been impossible without GPU acceleration.

Future: Quantum Computing’s Double-Edged Sword

- Potential: Quantum computing could break widely used encryption or simulate molecules, and quantum ML might eventually speed up some learning tasks.

- Risk: Overhype (e.g., “Quantum AI will solve everything by 2030!”) could trigger disillusionment.

Key Takeaway:

“Hardware enables, but hype destroys.” Track real compute advancements (e.g., Cerebras’ wafer-scale chips) over vaporware.

Timeline: AI’s Resilience Through Winters

| Year | Event | Impact |
|---|---|---|
| 1973 | Lighthill Report | Exposed AI’s limitations; funding slashed. |
| 1987 | Lisp Machine collapse | Symbolic AI died; neural networks emerged. |
| 1997 | Deep Blue beats Kasparov | Proved narrow AI could outperform humans. |
| 2012 | AlexNet wins ImageNet | Deep learning revolution began. |
| 2023 | Generative AI boom | New hype cycle: will it crash or endure? |

Conclusion: Winter is Coming (Again)

Today’s generative AI boom mirrors 1956’s Dartmouth optimism and 1980s Lisp machine hype. The pattern is clear:

- Overpromising → Backlash (e.g., “ChatGPT is just autocomplete”).

- Ethical shortcuts → Regulatory crackdowns (e.g., EU AI Act).

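Geoffrey Hinton’s Warning:

Hinton, who left Google in 2023 so he could speak freely about AI’s risks, has repeatedly urged the field to take safety seriously. Ignoring ethics and safety is one way to bring on the next winter.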